Pathomation: Quod AI (What about AI)?

A few months ago we published a blog article on how PMA.studio could be used as a platform in its own right to design bespoke organization-specific workflow and cockpit-solutions.

In the article we talked about LIMS integration, workflow facilitation and reporting.

We didn’t talk about AI.

We do have separate articles, though, in which we hypothesize and propose how integration between AI and the Pathomation platform could work; see also our YouTube videos on the subject.

So when we recently came across an AI provider with a stable and well-documented API, we jumped at the opportunity to pick up where we left off: we built an actual demonstrator with PMA.studio as the front-end and the AI engine as the back-end.

AI and digital pathology

At a very, very, VERY high level, here’s how AI in digital pathology works:

- The slide scanner takes a picture of your tissue at high resolution and stores it on the computer. The result of this process is a whole slide image (WSI). So when you look at a whole slide image on your computer, you’re looking at pixels. A pixel is very small (about 0.2 to 0.3 mm on a typical screen, if you really have to know).

- You are a person. You look at your screen and you don’t see pixels; they blend together to form images. If you’ve never looked at histology before, WSI data can look like pink and purple art. If you’re a biology student, you see cells. If you’re a pathologist, you see tumor tissue, invasive margins, an adenocarcinoma or DCIS perhaps.

- The challenge for AI is to make it see the same things that the real-life person sees. And it’s actually much harder than you would think: for a computer program (algorithm), we really start at the lowest level. The computer program only sees pixels, and you have to teach it somehow to group those together and to recognize features like cells, or necrotic tissue, or the stage of cell division.
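To make "grouping pixels together" a bit more concrete, here is a toy sketch in Python. It is not part of any Pathomation software and is far simpler than a real AI algorithm. It treats dark values in a tiny grayscale grid as "stained" pixels and counts how many connected groups they form. The function name `count_blobs`, the threshold of 128 and the grid itself are all made up for illustration.

```python
from collections import deque


def count_blobs(image, threshold=128):
    """Count 4-connected groups of pixels darker than `threshold`."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] >= threshold or seen[r][c]:
                continue  # background pixel, or already part of a blob
            blobs += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            # Flood-fill outward from this pixel to mark the whole blob.
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and image[ny][nx] < threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return blobs


# A 4 x 5 "image": 255 is white background, low values are dark pixels.
toy = [
    [255, 255, 255, 255, 255],
    [255,  40,  50, 255, 255],
    [255,  45, 255, 255,  30],
    [255, 255, 255, 255,  35],
]
print(count_blobs(toy))  # 2
```

Even this trivial version needs explicit rules for what counts as "dark" and what counts as "connected". Recognizing an actual cell, let alone a tumor, is many levels above that.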

It’s a fascinating subject really. It’s also something we don’t want to get into ourselves. Not in the algorithm design at least. Pathomation is not an AI company.

So why talk about it at all then? Because we do have that wonderful software platform for digital pathology. And that platform is perfectly suited to serve as a springboard, not only to rapidly enrich your LIMS with DP capabilities, but also to get your AI solution quickly off the ground.

If you’re familiar with the term, think of us as a PaaS for AI in digital pathology.

Workflow

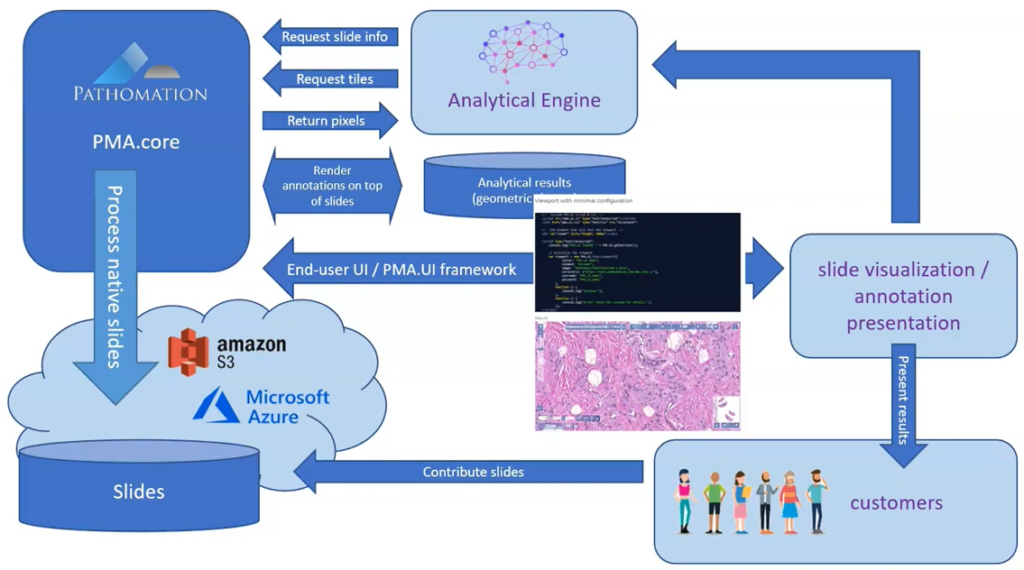

In one of our videos, we present the following abstract workflow:

Now that we’ve established a connection to an AI provider ourselves, we can outline a more detailed recipe with specific steps to follow:

- Indicate your region(s) of interest in PMA.studio

- Configure and prepare the AI engine to accept your ROIs

- Send off the ROI for analysis

- Process the results and store them in Pathomation (at the level of PMA.core actually)

- Use PMA.studio once again to inspect the results

Let’s have a more detailed look at how this works:

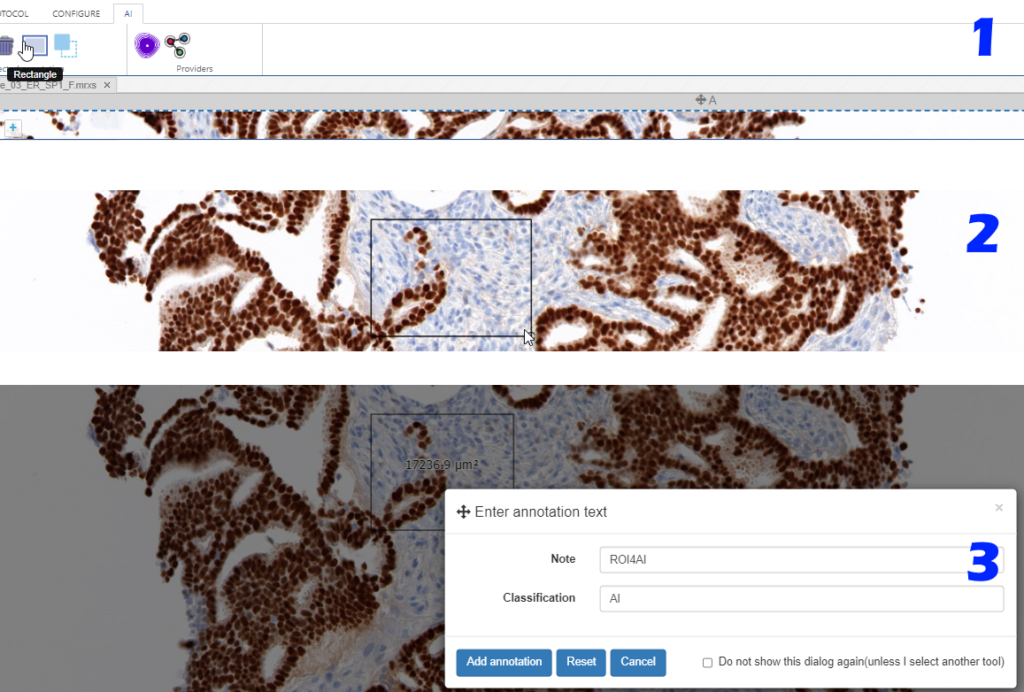

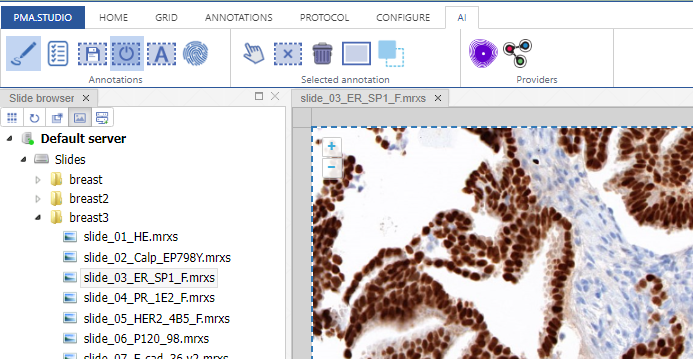

Step 1: Indicate your regions of interest

With the rectangle tool on the Annotations tab of the ribbon, you can draw a region of interest.

Don’t worry about the resolution (“zoomlevel”) at which you make the annotation; we’ll tell our code later on to automatically extract the pixels at the highest available zoomlevel. If you have a slide scanned at 40X, the pixels will be transferred as if you made the annotation at that level (even though you may have drawn the region while looking at the slide in PMA.studio at 10X).
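As a back-of-the-envelope illustration of what that means for the amount of data involved, here is a small Python sketch. It assumes that magnification translates linearly into pixel dimensions (so 40X has four times as many pixels per side as 10X). The function name and the numbers are hypothetical; this is not a call from the PMA.php SDK.

```python
def roi_at_scan_resolution(roi, viewed_at, scanned_at):
    """Scale an (x, y, width, height) rectangle, expressed in pixels at the
    magnification it was viewed at, to pixels at the scan magnification."""
    factor = scanned_at / viewed_at
    return tuple(round(value * factor) for value in roi)


# A 500 x 400 pixel rectangle as seen at 10X, on a slide scanned at 40X.
print(roi_at_scan_resolution((100, 50, 500, 400), viewed_at=10, scanned_at=40))
# (400, 200, 2000, 1600)
```

In other words, a modest-looking rectangle at 10X corresponds to 16 times as many pixels once it is extracted at 40X.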

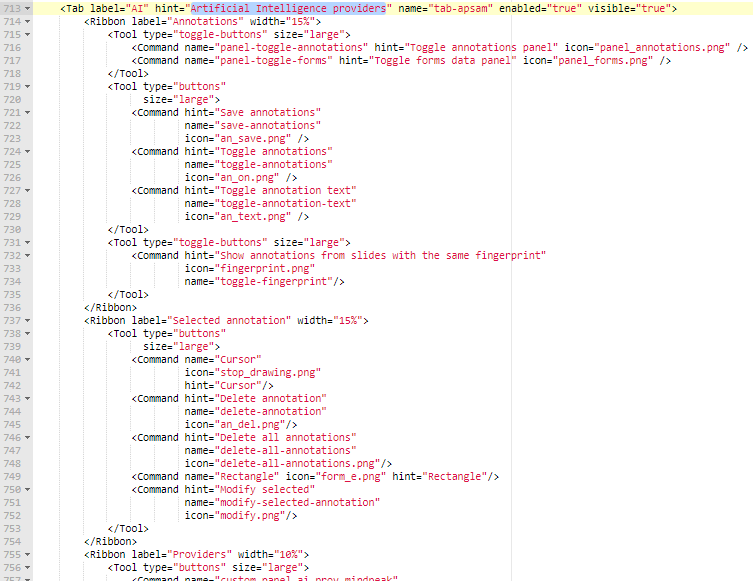

You can actually configure a separate AI tab in the back-end of PMA.studio, and repeat the most common annotation tools you’ll use to indicate your regions of interest: if you know you’ll always be drawing black rectangular regions, there’s really no point in having other things like compound polygons or a color selector:

Step 2: Configure the AI engine for ingestion

We added buttons to our ribbon to interact with two different AI engines.

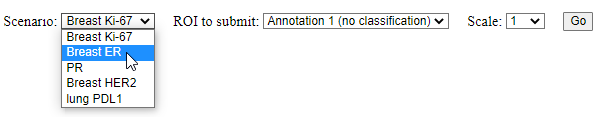

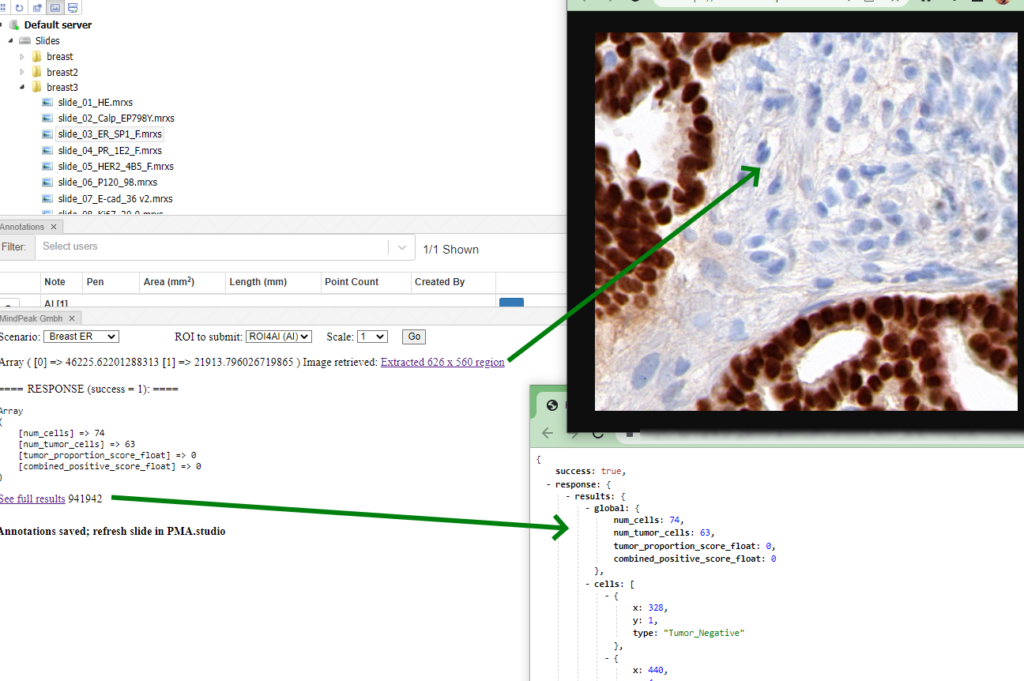

When clicking on one of these buttons, you can select the earlier indicated region of interest to send off for analysis, as well as which algorithm (“scenario” in the provider’s terminology) to run on the pixels:

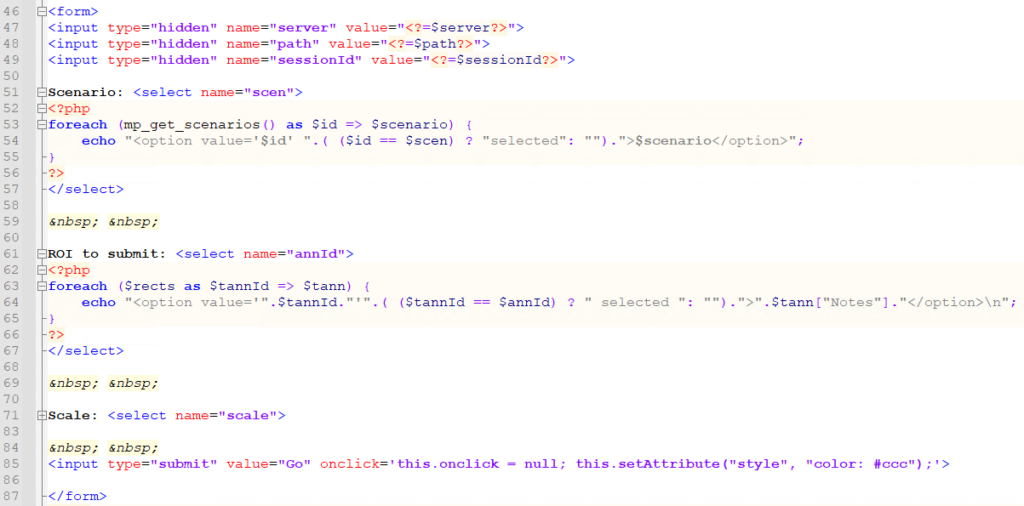

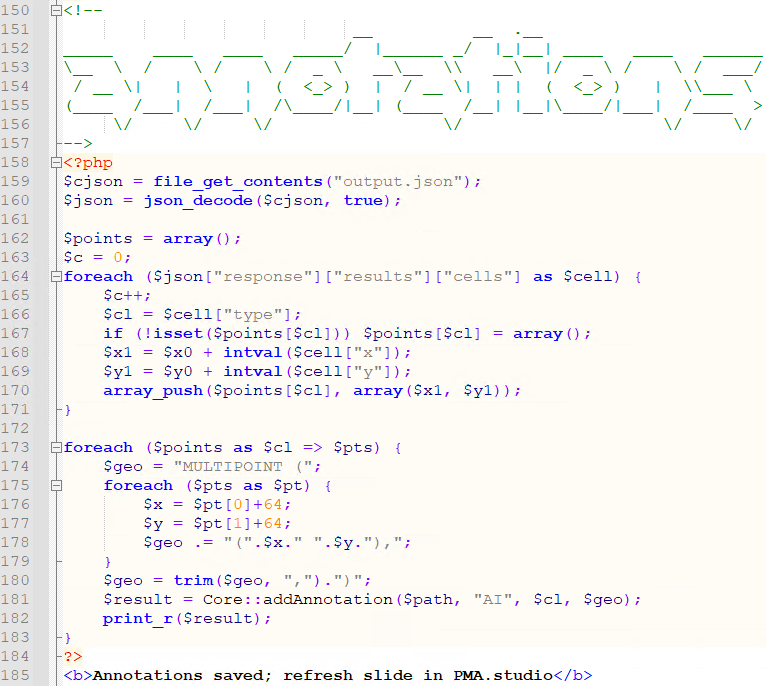

The way this works behind the scenes: the panel that’s configured in the ribbon ends up loading a custom PHP script. Information about the current slide in PMA.studio is passed along through PMA.studio’s built-in mechanism for custom panels.

The first part of the script then interacts both with the AI provider, to determine which algorithms are available, and with the Pathomation back-end (the PMA.core tile server that hosts the data for PMA.studio), to retrieve the available ROIs:

Step 3: Extract the pixels from the original slide, and send them off to the AI engine

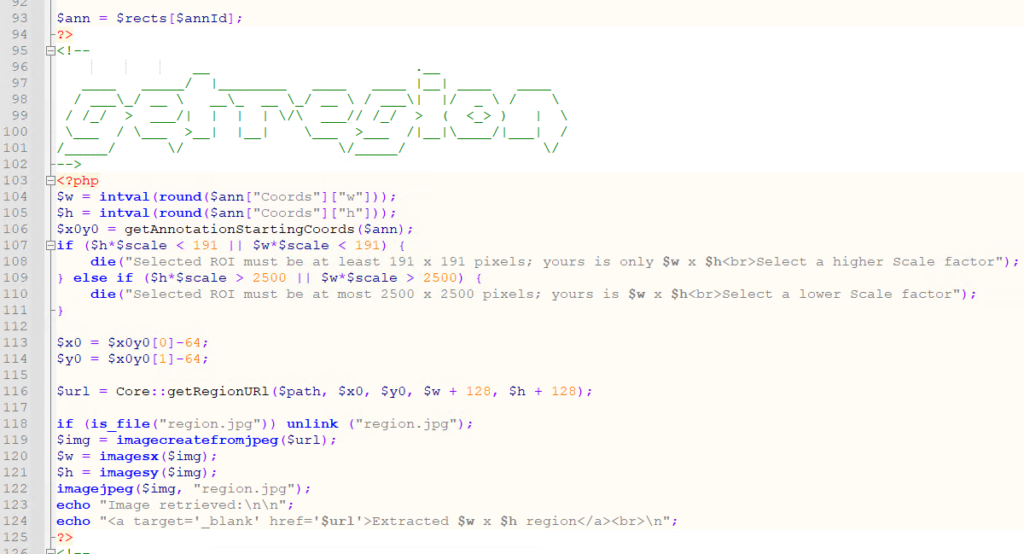

First, we use Pathomation’s PMA.php SDK to extract the region of pixels indicated from the annotated ROI:

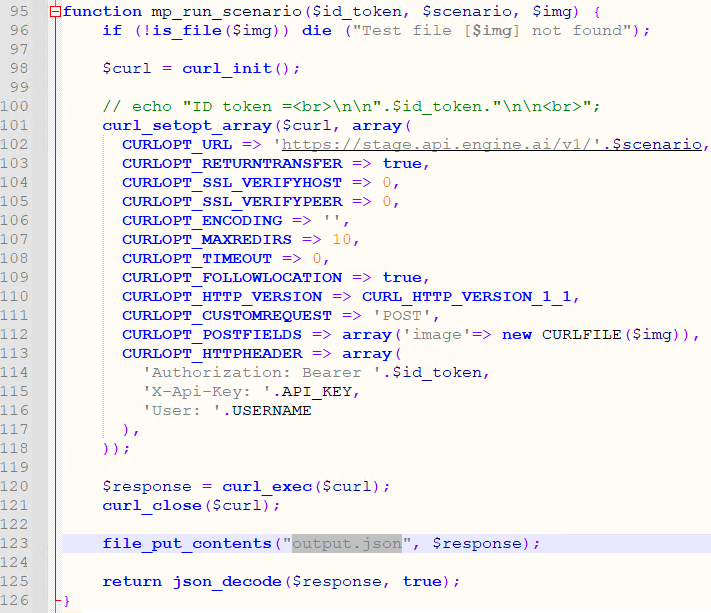

We store a local copy of the extracted pixels, and pass that on to the AI engine, using PHP’s curl syntax:
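The demonstrator itself does this in PHP. For readers who want a feel for what such a call involves, here is a rough Python sketch using only the standard library. Everything specific in it is an assumption for illustration: the endpoint URL, the form field names `scenario` and `image`, and the bearer-token authentication. A real AI provider's API will have its own conventions.

```python
import json
import urllib.request
import uuid


def build_analysis_request(endpoint, image_bytes, scenario, api_key):
    """Build (but do not send) a multipart/form-data POST carrying the ROI pixels."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        b'Content-Disposition: form-data; name="scenario"\r\n\r\n',
        scenario.encode() + b"\r\n",
        f"--{boundary}\r\n".encode(),
        b'Content-Disposition: form-data; name="image"; filename="roi.jpg"\r\n',
        b"Content-Type: image/jpeg\r\n\r\n",
        image_bytes + b"\r\n",
        f"--{boundary}--\r\n".encode(),
    ])
    return urllib.request.Request(
        endpoint,
        data=body,
        method="POST",
        headers={
            "Content-Type": f"multipart/form-data; boundary={boundary}",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
    )


def send(request, timeout=30):
    """Send the request and decode the JSON reply (not called in this sketch)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Hypothetical endpoint, scenario name and key; the bytes stand in for a JPEG.
req = build_analysis_request(
    "https://ai.example.com/analyze", b"\xff\xd8fake-jpeg", "ki67", "SECRET"
)
print(req.get_method(), len(req.data), "bytes")
```

In practice `image_bytes` would be read from the local copy of the extracted region, and `send(req)` would return the parsed JSON result for the next step.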

Step 4: Retrieve the result and process it back into PMA.core

Finally, the JSON returned by the AI engine is parsed and translated (ETL) into PMA.core multipoint annotations.
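A minimal Python sketch of that translation step could look as follows. It rests on several assumptions: that the AI engine returns a `detections` list with `x` and `y` coordinates relative to the top-left corner of the ROI, and that the target annotation can be expressed as a WKT `MULTIPOINT` string. The actual JSON layout depends on the AI provider, and the function name is hypothetical.

```python
import json


def detections_to_multipoint(result_json, roi_x, roi_y):
    """Convert ROI-relative detections into slide coordinates, returned as a
    WKT MULTIPOINT string together with the number of detections."""
    result = json.loads(result_json)
    # Shift each point by the ROI's top-left corner to get slide coordinates.
    points = [(roi_x + d["x"], roi_y + d["y"]) for d in result["detections"]]
    if not points:
        return "MULTIPOINT EMPTY", 0
    coords = ", ".join(f"({x} {y})" for x, y in points)
    return f"MULTIPOINT ({coords})", len(points)


# Assumed response shape, for illustration only.
reply = '{"detections": [{"x": 12, "y": 30}, {"x": 40, "y": 8}]}'
wkt, count = detections_to_multipoint(reply, roi_x=1000, roi_y=2000)
print(wkt)    # MULTIPOINT ((1012 2030), (1040 2008))
print(count)  # 2
```

The offset matters: the AI engine only ever saw the extracted region, so its coordinates have to be shifted back before they line up with the slide. The count is the kind of aggregate value that could go into a form.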

A form is also generated automatically to keep track of aggregate data if needed:

Step 5: Visualize the result in PMA.studio

The results can now be shown in PMA.studio.

The data is also available through the forms panel and the annotations panel:

What’s next?

How useful is this? AI is only as good as what people do with it. In this case: it’s very easy to select a region on a slide where the chosen algorithm doesn’t make sense to apply in the first place. Or what about running a Ki67 counting algorithm on a PR stained slide?

We’re dealing with supervised AI here, and this procedure only works when properly supervised.

Whole slide scaling is another issue to consider. In our runs, it takes about 5 seconds to get an individual ROI analyzed. What if you want to have the whole slide analyzed? In a follow-up experiment, we want to see if we can parallelize this process. The basic infrastructure at the level of PMA.core is already there.
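To give an idea of what such a parallel run might look like, here is a Python sketch that cuts a slide into tiles and hands them to a pool of worker threads. It is an assumption about how the follow-up experiment could be structured, not a description of existing code: the slide dimensions, the tile size, the number of workers and the stub `analyze_tile` (standing in for one round trip to the AI engine) are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def tile_slide(width, height, tile_size):
    """Split a slide into (x, y, w, h) tiles, clipped at the right and bottom edges."""
    return [
        (x, y, min(tile_size, width - x), min(tile_size, height - y))
        for y in range(0, height, tile_size)
        for x in range(0, width, tile_size)
    ]


def analyze_tile(tile):
    """Stub for one request to the AI engine; a real version would extract
    the tile's pixels, send them off and return the parsed result."""
    return {"tile": tile, "count": 0}


def analyze_slide(width, height, tile_size, workers=8):
    """Analyze all tiles concurrently; results come back in tile order."""
    tiles = tile_slide(width, height, tile_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(analyze_tile, tiles))


tiles = tile_slide(100_000, 80_000, 2_000)
print(len(tiles))  # 2000
```

For this hypothetical 100,000 x 80,000 pixel slide, 2,000 tiles at about 5 seconds each would take close to three hours one after the other. Threads are a natural fit if each call mostly waits on the network, but the real speed-up depends on how many concurrent requests the AI engine accepts.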

Demo

The demo described in this article is not publicly available, due to usage restrictions on the AI engine. Do contact us for a demonstration at your earliest convenience though, and let us show you how the combination of PMA.core and PMA.studio can lift your AI endeavors to the next level.